You have to know when to hold them, and when to fold them. That's the more-than-slightly smug assessment of IBM executives as they reflect -- with twinkles in their eyes -- on the months-stalled Oracle acquisition of Sun Microsystems, a deal that IBM initially sought but then declined earlier this year.

Chatting over drinks at the end of day one of the Software Analyst Connect 2009 conference in Stamford, Conn., IBM Senior Vice President and IBM Software Group Executive Steve Mills told me last night he thinks the Oracle-Sun deal will go through, but it won't necessarily be worth $9.50 a share to Oracle when it does.

"He (Oracle Chairman Larry Ellison) didn't understand the hardware business. It's a very different business from software," said Mills.

Mills seemed very much at ease with IBM's late-date jilt of Sun (Sun was apparently playing hard to get in order to get more than $9.40/share from Big Blue's coffers). IBM's stock price these days is homing in on $130, quite a nice turn of events given the global economy.

Sun is trading at $8.70, a significant discount to Oracle's $9.50 bid, reflecting investor worries about the fate of the deal now under scrutiny by European regulators, Mills's views notwithstanding.

IBM Software Group Vice President of Emerging Technology Rod Smith noted the irony -- perhaps ancient Greek tragedy-caliber irony -- that a low market share open source product is holding up the biggest commercial transaction of Sun's history. "That open source stuff is tricky on who actually makes money and how much," Smith chorused.

Should Mills's prediction that Oracle successfully maintains its bid for Sun prove incorrect, it could mean bankruptcy for Sun. And that may mean many of Sun's considerable intellectual property assets would go at fire-sale prices to ... perhaps a few piecemeal bidders, including IBM. Smith just smiled, easily shrugging off the chill (socks intact) from the towering "IBM" logo ice sculpture a few steps away.

And wouldn't this holdup go away if Sun and/or Oracle jettisoned MySQL? Is it pride or hubris that makes a deal sour for one mere grape? Was the deal (and $7.4 billion) all about MySQL? Hardly.

Many observers think that Sun's Java technology -- and not its MySQL open source database franchise -- should be of primary concern to European (and U.S.) anti-trust mandarins. I have to agree. But Mills isn't too concerned with Oracle's probable iron-grip on Java ..., err licensing. IBM has a long-term license on the technology, the renewal of which is many years out. "We have plenty of time," said Mills.

Yes, plenty of time to make Apache Harmony a Java doppelganger -- not to mention the Java market-soothing effects of OSGi and Eclipse RCP. [Hey, IBM invented Java for the server for Sun, it can re-invent it for something else ... SAP?]

Unlike some software titans, Mills is clearly not living in a "reality distortion field" when it comes to Oracle's situation.

"We're in this for the long haul," said Mills, noting that he and IBM have been competing with Oracle since August 1993 when IBM launched its distributed DB2 product. "All of our market share comes at the expense of Oracle's," said Mills. "And we love to do benchmarks against Oracle."

Even as the Fates seem to be on IBM's side nowadays, the stakes remain high for the users of these high-end database technologies and products. It's my contention that we're only now entering the true data-driven decade. And all that data needs to run somewhere. And it's not going to be in MySQL, no matter who ends up owning it.

Wednesday, November 18, 2009

HP offers slew of products and services to bring cost savings and better performance to virtual desktops

Hewlett-Packard (HP) this week unleashed a barrage of products aimed at delivering affordable and simple computing experiences to the desktop.

These include thin-client and desktop virtualization solutions, as well as a multi-seat offering that can double computing seats. At the same time, the company targeted the need for data security with a backup and recovery system for road warriors. [Disclosure: HP is a sponsor of BriefingsDirect podcasts.]

The thin-client offerings from the Palo Alto, Calif. company include the HP t5740 and HP t5745 Flexible Series, which feature Intel Atom N280 processors and an Intel GL40 chipset. They also provide eight USB 2.0 ports and an optional PCI expansion module for easy upgrades.

The Flexible Series thin clients support rich multimedia for visual display solutions, including the new HP LD4700 47-inch Widescreen LCD Digital Signage Display, which can run in both bright and dim lighting while maintaining longevity, and can be set in either a horizontal or vertical position. With the new HP Digital Signage Display (DSD) Wall Mount, users can hang the display on a wall to showcase videos, graphics or text in a variety of commercial settings where an extra-large screen is desired.

The HP t5325 Essential Series Thin Client is a power-efficient thin client with a new interface that simplifies setup and deployment. All new HP thin clients include intuitive setup tools to streamline configuration and management. These include the ThinPro Setup Wizard for Linux and HP Easy Config for Microsoft Windows.

In addition, HP thin clients also include on-board utilities that automate deployment of new connections, properties, low-bandwidth add-ons, and image updates from one centralized repository to thousands of thin clients.

Client virtualization

Three new client virtualization architectures combine Citrix XenDesktop 4, Citrix XenApp or VMware View with HP ProLiant servers, storage and thin clients to provide midsize to large businesses with a range of scalable offerings.

HP ProLiant WS460c G6 Workstation Blade brings centralized, mission-critical security to workstation computing and allows individuals or teams to work and collaborate remotely and securely. This solution meets the performance and scalability needs for high-end visualization and handling of large model sizes demanded by enterprise segments such as engineering and oil and gas.

HP Client Automation 7.8, part of the HP Business Service Automation software portfolio, allows customers to deploy and migrate to a virtual desktop infrastructure environment and manage it through the entire life cycle with a common methodology that reduces management costs and complexity. Customers can also capture inventory and usage information to help size their initial virtual client deployment and reoptimize as end-user needs change over time.

The HP MultiSeat Solution stretches the computing budgets of small businesses and other resource-constrained organizations by delivering up to twice as many computing seats as traditional PCs for the same IT spend.

HP MultiSeat uses the excess computing capacity of a single PC to give up to 10 simultaneous users an individualized computing experience. This is designed to help organizations affordably increase computing seats and provide a simple setup, as well as reduce energy consumption by as much as 80 percent per user over traditional PCs.

Data protection and backup

To address the problem of mobile workers -- now estimated at 25 percent of the workforce -- potentially losing company data, HP is offering HP Data Protector Notebook Extension, which can back up and recover data outside the corporate network, even while the worker is remote and offline.

With the Data Protector, data is instantly captured and backed up automatically each time a user changes, creates or receives a file. The data is then stored temporarily in a local repository pending transfer to the network data vault for full backup and restore capabilities. With single-click recovery, users can recover their own files without initiating help desk calls.

Deduplication, data encryption, and compression techniques help to maximize bandwidth efficiency and ensure security. Deduplicating multiple copies of data reduces the user’s storage footprint. All of the user’s data is then stored encrypted and compressed, and expired versions are cleaned up.
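To make the idea concrete, here is a minimal Python sketch of how content-based deduplication, compression, and version cleanup can fit together in a local repository. It is an illustration only, not HP's implementation: the `LocalRepository` class and its method names are hypothetical, and encryption is left out because Python's standard library does not include a symmetric cipher.

```python
import hashlib
import zlib


class LocalRepository:
    """Toy local backup store (hypothetical; not how HP Data Protector works)."""

    def __init__(self):
        self.blobs = {}     # content hash -> compressed bytes
        self.versions = {}  # file path -> list of content hashes, oldest first

    def capture(self, path, data):
        """Record a new version of `path`; identical content is stored once."""
        digest = hashlib.sha256(data).hexdigest()
        if digest not in self.blobs:
            # Only previously unseen content costs storage, and it is compressed.
            self.blobs[digest] = zlib.compress(data)
        self.versions.setdefault(path, []).append(digest)
        return digest

    def restore(self, path, version=-1):
        """Return the bytes of a stored version (the newest by default)."""
        digest = self.versions[path][version]
        return zlib.decompress(self.blobs[digest])

    def expire(self, path, keep=1):
        """Keep only the newest `keep` versions, then drop unreferenced blobs."""
        if keep < 1:
            raise ValueError("keep must be at least 1")
        self.versions[path] = self.versions[path][-keep:]
        live = {d for hashes in self.versions.values() for d in hashes}
        self.blobs = {d: b for d, b in self.blobs.items() if d in live}
```

In this sketch, two files with the same contents share a single compressed blob, and a blob is discarded only when no remaining version of any file still refers to it.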

HP introduced HP Backup and Recovery Fast Track Services, a suite of scalable service engagements that help ensure a successful implementation of HP Data Protector and HP Data Protector Notebook Extension.

Workshops and services

To help companies chart their way to client virtualization, HP is also offering a series of workshops and services:

- The Transformation Experience Workshop is a one-day intensive session to help customers build their strategy for virtualized solutions, identify a high-level roadmap, and get executive consensus.

- The Business Benefit Workshop allows customers to identify, quantify and analyze the business benefits of client virtualization, as well as set return-on-investment targets prior to entering the planning stage.

- An Enhanced HP Solution Architecture and Pilot Service ensures the successful integration of the client virtualization solution into the customer’s infrastructure through a clear roadmap, architectural blueprint, and phased implementation strategy.

The t5325 Essential Series Thin Client starts at $199 and is expected to be available Dec. 1.

Elastra beefs up automation offering for enterprise cloud computing

Elastra Corp., which provides application infrastructure automation, has upped the ante with the announcement this week of Elastra Cloud Server (ECS) 2.0 Enterprise Edition. The new addition from the San Francisco company will help IT organizations leverage the economics of cloud computing, while preserving existing architectural practices and corporate policies.

Relying on an increased level of automation, the enterprise edition:

- Automatically generates deployment plans and provisions sophisticated systems that are optimized to minimize operational and capital expenses. At the same time, applications are deployed to be compliant with the customers’ own sets of policies, procedures, and service level agreements (SLAs).

- Cuts the lead times IT needs to create complex development, testing, and production environments by automating the processes traditionally managed by hand or via hand-crafted scripts.

- Lets IT organizations maintain control of their operations using familiar tools and technologies while delivering on-demand, self-service system provisioning to their users.

Elastra offers a free edition of ECS running on Amazon Web Services and an enterprise edition for private data centers.

I was impressed with Elastra when I was initially briefed in 2007. They have many of the right features for what the cloud market will demand. More data centers will be deploying "private cloud" attributes, and those will become yet larger portions of modern data centers.

Monday, November 16, 2009

BriefingsDirect analysts discuss business commerce clouds: Wave of the future or old wine in a new bottle?

Listen to the podcast. Find it on iTunes/iPod and Podcast.com. View a full transcript, or download a copy. Charter Sponsor: Active Endpoints. Also sponsored by TIBCO Software.

Welcome to the latest BriefingsDirect Analyst Insights Edition, Vol. 46. Our topic for this episode of BriefingsDirect Analyst Insights Edition centers on "business commerce clouds." As the general notion of cloud computing continues to permeate the collective IT imagination, an offshoot vision holds that multiple business-to-business (B2B) players could use the cloud approach to build extended business process ecosystems.

It's sort of like a marketplace in the cloud on steroids, on someone else's servers, perhaps to engage on someone's business objectives, and maybe even satisfy some customers along the way. It's really a way to make fluid markets adapt at Internet speed, at low cost, to business requirements, as they come and go.

I, for one, can imagine a dynamic, elastic, self-defining, and self-directing business-services environment that wells up around the needs of a business group or niche, and then subsides when lack of demand dictates. Here's an early example of how it works, in this case for food recall.

The concept of this business commerce cloud was solidified for me just a few weeks ago, when I spoke to Tim Minahan, chief marketing officer at Ariba. I've invited Tim to join us to delve into the concept, and the possible attractions, of business commerce clouds. We're also joined by this episode's IT industry analyst guests: Tony Baer, senior analyst at Ovum; Brad Shimmin, principal analyst at Current Analysis; Jason Bloomberg, managing partner at ZapThink; JP Morgenthal, independent analyst and IT consultant, and Sandy Kemsley, independent IT analyst and architect. The discussion is moderated by me, Dana Gardner, principal analyst at Interarbor Solutions.

This periodic discussion and dissection of IT infrastructure-related news and events, with a panel of industry analysts and guests, comes to you with the help of our charter sponsor, Active Endpoints, maker of the ActiveVOS visual orchestration system, and through the support of TIBCO Software.

Here are some excerpts:

Minahan: When we talk about business commerce clouds, what we're talking about is leveraging the cloud architecture to go to the next level. When folks traditionally think of the cloud or technology, they think of managing their own business processes. But, as we know, if we are going to buy, sell, or manage cash, you need to do that with at least one, if not more, third parties.

The business commerce cloud leverages cloud computing to deliver three things. It delivers the business process application itself as a cloud-based or a software-as-a-service (SaaS)-based service. It delivers a community of enabled trading partners that can quickly be discovered, connected to, and collaborated with.

And, the third part is around capabilities -- the ability to dial up or dial down, whether it be expertise, resources, or other predefined best practice business processes -- all through the cloud.

... Along the way, what we [at Ariba] found was that we were connecting all these parties through a shared network that we call the Ariba Supplier Network. We realized we weren't just creating value for the buyers, but we were creating value for the sellers.

They were pushing us to develop new ways for them to create new business processes on the shared infrastructure -- things like supply chain financing, working capital management, and a simple way to discover each other and assess who their next trading partners may be.

... In the past year, companies have processed $120 billion worth of purchased transactions and invoices over this network. Now, they're looking at new ways to find new trading partners -- particularly as the incidence of business bankruptcies is up -- as well as extend to new collaborations, whether it be sharing inventory or helping to manage their cash flow.

Baer: I think there are some very interesting possibilities, and in certain ways this is very much an evolutionary development that began with the introduction of EDI 40 or 45 years ago.

Actually, if you take a look at supply-chain practices among some of the more innovative sectors, especially consumer electronics, where you deal with an industry that's very volatile both by technology and consumer taste, this whole idea of virtualizing the supply chain, where different partners take on greater and greater roles in enabling each other, is very much a direct follow-on to all that.

Roughly 10 years ago, when we were going through the Internet 1.0 or the dot-com revolution, we started getting into these B2B online trading hubs with the idea that we could use the Internet to dynamically connect with business partners and discover them. Part of this really seemed to go against the trend of supply-chain practice over the previous 20 years, which was really more to consolidate on a known group of partners as opposed to spontaneously connecting with them.

Shimmin: ... I look at this as an enabler, in a positive way. What the cloud does is allow what Tim was hinting at -- with more spontaneity, self-assembly, and visibility into supply chains in particular -- that you didn't really get before with the kind of locked down approach we had with EDI.

That's why I think you see so many of those pure-play EDI vendors like GXS, Sterling, SEEBURGER, Inovis, etc. not just opening up to the Internet, but opening up to some of the more cloudy standards like cXML and the like, and really doing a better job of behaving like we in the 2009-2010 realm expect a supply chain to behave, which is something that is much more open and much more visible.

Kemsley: ... I think it has huge potential, but one of the issues that I see is that so many companies are afraid to start to open up, to use external services as part of their mission-critical businesses, even though there is no evidence that a cloud-based service is any less reliable than their internal services. It's just that the failures that happen in the cloud are so much more publicized than their internal failures that there is this illusion that things in the cloud are not as stable.

There are also security concerns as well. I have been at a number of business process management (BPM) conferences in the last month, since this is conference season, and that is a recurring theme. Some of the BPM vendors are putting their products in the cloud so that you can run your external business processes purely in the cloud, and obviously connect to cloud-based services from those.

A lot of companies still have many, many problems with that from a security standpoint, even though there is no evidence that that's any less secure than what they have internally. So, although I think there is a lot of potential there, there are still some significant cultural barriers to adopting this.

Minahan: ... The cloud provider, because of the economies of scale they have, oftentimes provides better security and can invest more in security -- partitioning, and the like -- than many enterprises can deliver themselves. It's not just security. It's the other aspects of your architectural performance.

Bloomberg: ... I am coming at it from a skeptic's perspective. It doesn’t sound like there's anything new here. ... We're using the word "cloud" now, and we were talking about "business webs." I remember business webs were all the rage back when Ariba had their first generation of offerings, as well as Commerce One and some of the other players in that space.

Age-old challenges

The challenges then are still the challenges now. Companies don't necessarily like doing business with other organizations that they don't have established relationships with. The value proposition of the central marketplaces has been hammered out now. If you want to use one, they're already out there and they're already matured. If you don't want to use one, putting the word "cloud" on it is not going to make it any more appealing.

Morgenthal: ... Putting additional information in the cloud and making value out of that adds some overall value to the cost of the information or the cost of running the system, so you can derive a few things. But, ultimately, the same problems that are needed to drive a community working together, doing business together, exchanging product through an exchange are still there.

... What's being done through these environments is the exchange of money and goods. And, it's the overhead related to doing that, that makes this complex. RollStream is another startup in the area that's trying to make waves by simplifying the complexities around exchanging the partner agreements and doing the trading partner management using collaborative capabilities. Again, the real complexity is the business itself. It's not even the business processes. The data is there.

... Technology is a means to an end. The end that's got to get fixed here isn't an app fix. It's a community fix. It's a "how business gets done" fix. Those processes are not automated. Those are human tasks.

Minahan: ... As it applies to the cloud and the commerce cloud, what's interesting here is the new services that can be available. It's different. It's not just about discovering new trading partners. It's about creating efficiencies and more effective commerce processes with those trading partners.

I'll give you a good example. I mentioned before about the Ariba Network with $111 billion worth of transactions and invoices being transferred over this every year for the past 10 years. That gives us a lot of intelligence that new companies are coming on board.

An example would be The Receivables Exchange. Traditionally sellers, if they wanted to get their cash fast, could factor the receivables at $0.25 on the dollar. This organization recognized the value of the information that was being transacted over this network and was able to create an entirely new service.

They were able to mitigate the risk, and provide supply chain financing at a much lower basis -- somewhere between two and four percent -- by using the historical information on those trading relationships, as well as understanding the stability of the buyer.

Because folks are in a shared infrastructure here that can be continually introduced, new services can be dialed up and dialed down. It's a lot different than a rigid EDI environment or just a discovery marketplace. ... What we're seeing with our customers is that the real benefits of the cloud come in three areas: productivity, agility, and innovation.

... When folks talk about cloud, they really think about the infrastructure, and what we are talking about here is a business service cloud.

Gartner calls it the business process utility, which ultimately is a form of technology-enabled business process outsourcing. It's not just the technology. The technology or the workflow is delivered in the cloud or as a web-based service, so there is no software, hardware, etc. for the trading partners to integrate, to deploy or maintain. That was the bane of EDI private VANs.

The second component is the community. Already having an established community of trading partners who are actually conducting business and transactions is key. I agree with the statement that it comes down to the humans and the companies having established agreements. But the point is that it can be built upon a large trading network that already exists.

The last part, which I think is missing here, and that's so interesting about the business commerce cloud, are the capabilities. It's the ability for either the solution provider or other third parties to deliver skills, expertise, and resources into the cloud as well as a web-based service.

It's also the information that can be garnered off the community to create new web-based services and capabilities that folks either don't have within their organization or don't have the ability or wherewithal to go out and develop and hire on their own. There is a big difference between cloud computing and these business service clouds that are growing.

Shimmin: ... The fuller picture is to look at this as a combination of [Apple App Store] and the Amazon marketplace. That's where I think you will see the most success with these commerce clouds -- a very specific community of like-minded suppliers and purchasers that want to get together and open their businesses up to one another.

... A community of companies wants to be able to come together affordably, so that the SMB can on-board an exchange at an affordable rate. That's really been the problem with most of these large-scale EDI solutions in the past. It's so expensive to bring on the smaller players that they can't play.

... When you have that sort of like-mindedness, you have the wherewithal to collaborate. But, the problem has always been finding the right people, getting to that knowledge that people have, and getting them to open it up. That's where the social networking side of this comes in. That's where I see the big EDI guns I was talking about and the more modernized renditions opening up to this whole Google Wave notion of what collaboration means in a social networking context. That's one key area -- being able to have the collaboration and social networking during the modeling of the processes.

Minahan: ... We're seeing that already through the exchange that we have amongst our customers or around our solutions. We're also seeing that in a lot of the social networking communities that we participate in around the exchange of best practices. The ability to instantiate that into reusable workflows is something that's certainly coming.

Folks are always asking these days, "We hear a lot about this cloud. What business processes or technologies should we put in the cloud?" When you talk about that, the most likely ones are inter-enterprise, whether they be around commerce, talent management, or customer management. It's what happens between enterprises where a shared infrastructure makes the most sense.

Special offer: Download a free, supported 30-day trial of Active Endpoints' ActiveVOS at www.activevos.com/insight.

ZapThink explores the four stages of SOA governance that lead to business agility

This guest post comes courtesy of Jason Bloomberg, managing partner at ZapThink.

By Jason Bloomberg

For several years now, ZapThink has spoken about SOA governance "in the narrow" vs. SOA governance "in the broad." SOA governance in the narrow refers to governance of the SOA initiative, and focuses primarily on the service lifecycle.

When vendors try to sell you SOA governance gear, they're typically talking about SOA governance in the narrow. SOA governance in the broad, in contrast, refers to IT governance in the SOA context. In other words, how will SOA help with IT governance (and by extension, corporate governance) once your SOA initiative is up and running?

In both our Licensed ZapThink Architect Boot Camp and our newer SOA and Cloud Governance Course, we also point out how governance typically involves human communication-centric activities like architecture reviews, human management, and people deciding to comply with policies. We point out this human context for governance to contrast it with the technology context that inevitably becomes the focus of SOA governance in the narrow. There is an important technology-centric SOA governance story to be told, of course, as long as it's placed into the greater governance context.

One question we haven't yet addressed in depth, however, is how these two contrasts -- narrow vs. broad, human vs. technology -- fit together. Taking a closer look, there's an important trend taking shape, as organizations mature their approach to SOA governance, and with it, the overall SOA effort. Following this trend to its natural conclusion highlights some important facts about SOA, and can help organizations understand where they want to end up as their SOA initiative reaches its highest levels of maturity.

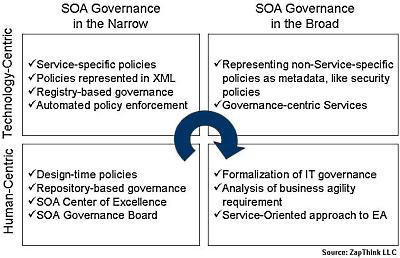

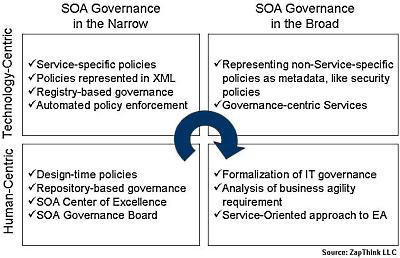

Introducing the SOA governance grid

Whenever faced with two orthogonal contrasts, the obvious thing to do is put them in a grid. Let's see what we can learn from such a diagram:

The ZapThink SOA governance grid

First, let's take a look at what each square contains, starting with the lower left corner and moving clockwise, because as we'll see, that's the sequence that corresponds best to increasing levels of SOA maturity.

1. Human-centric SOA governance in the narrow

As organizations first look at SOA and the governance challenge it presents, they must decide how they want to handle various governance issues. They must set up a SOA governance board or other committee to make broad SOA policy decisions. We also recommend setting up a SOA Center of Excellence to coordinate such policies across the whole enterprise.

These policy decisions initially focus on how to address business requirements, how to assemble and coordinate the SOA team, and what the team will need to do as they ramp up the SOA effort. The output of such SOA governance activities tends to be written documents and plenty of conversations and meetings.

The tools architects use for this stage are primarily communication-centric, namely word processors and portals and the like. But this stage is also when the repository comes into play as a place to put many such design time artifacts, and also where architects configure design time workflows for the SOA team. Technology, however, plays only a supporting role in this stage.

2. Technology-centric SOA governance in the narrow

As the SOA effort ramps up, the focus naturally shifts to technology. Governance activities center on the registry/repository and the rest of the SOA governance gear. Architects roll up their sleeves and hammer out technology-centric policies, preferably in an XML format that the gear can understand. Representing certain policies as metadata enables automated communication and enforcement of those policies, and also makes it more straightforward to change those policies over time.
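As a rough illustration of what representing policies as metadata can look like, here is a minimal Python sketch. The policy format, element names, and attribute names are all invented for this example and do not correspond to any particular registry/repository product or standard; the point is only that once a policy is data, a tool can check it automatically and the policy can change without touching the checking code.

```python
import xml.etree.ElementTree as ET

# Hypothetical policy document: the element and attribute names are
# assumptions made for this sketch, not a real governance schema.
POLICY_XML = """
<policies>
  <policy id="require-https" attribute="transport" equals="https"/>
  <policy id="require-owner" attribute="owner" present="true"/>
</policies>
"""


def load_policies(xml_text):
    """Parse policy metadata into a list of plain dictionaries."""
    root = ET.fromstring(xml_text)
    return [dict(p.attrib) for p in root.findall("policy")]


def check_service(service, policies):
    """Return the ids of the policies that the service description violates."""
    violations = []
    for policy in policies:
        value = service.get(policy["attribute"])
        if "equals" in policy and value != policy["equals"]:
            violations.append(policy["id"])
        elif policy.get("present") == "true" and not value:
            violations.append(policy["id"])
    return violations


policies = load_policies(POLICY_XML)
# A made-up service description that uses plain HTTP.
service = {"name": "OrderLookup", "transport": "http", "owner": "billing-team"}
print(check_service(service, policies))
```

Changing a policy here means editing the XML, not the code, which is the property the paragraph above attributes to metadata-based policies.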

This stage is also when run time SOA governance begins. Certain policies must be enforced at run time, either within the underlying runtime environment, in the management tool, or in the security infrastructure. At this point the SOA registry becomes a central governance tool, because it provides a single discovery point for run time policies. Tool-based interoperability also rises to the fore, as WS-I compliance and compliance with the Governance Interoperability Framework or the CentraSite Community become essential governance policies.

3. Technology-centric SOA governance in the broad

The SOA implementation is up and running. There are a number of services in production, and their lifecycle is fully governed through hard work and proper architectural planning. Taking the SOA approach to responding to new business requirements is becoming the norm. So, when new requirements mean new policies, it's possible to represent some of them as metadata as well, even though the policies aren't specific to SOA.

Such policies are still technology-centric, for example, security policies or data governance policies or the like. Fortunately, the SOA governance infrastructure is up to the task of managing, communicating, and coordinating the enforcement of such policies. By leveraging SOA, it's possible to centralize policy creation and communication, even for policies that aren't SOA-specific.

Sometimes, in fact, new governance requirements can best be met with new services. For example, a new regulatory requirement might lead to a new message auditing policy. Why not build a service to take care of that? This example highlights what we mean by SOA governance in the broad. SOA is in place, so when a new governance requirement comes over the wall, we naturally leverage SOA to meet that requirement.
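To make the message auditing example a little more concrete, here is a minimal Python sketch of such a service. Everything in it is hypothetical: the class name, the record fields, and the in-memory list that stands in for a durable audit store are assumptions for illustration only.

```python
import hashlib
import json
from datetime import datetime, timezone


class MessageAuditService:
    """Hypothetical auditing service: records who sent what, and when."""

    def __init__(self):
        # In-memory stand-in for a durable audit store.
        self.records = []

    def audit(self, sender, receiver, payload):
        """Store a digest of the message rather than the message itself."""
        body = json.dumps(payload, sort_keys=True).encode("utf-8")
        record = {
            "sender": sender,
            "receiver": receiver,
            "sha256": hashlib.sha256(body).hexdigest(),
            "timestamp": datetime.now(timezone.utc).isoformat(),
        }
        self.records.append(record)
        return record


def send_with_audit(audit_service, deliver, sender, receiver, payload):
    """Audit the message first, then hand it to the delivery function."""
    audit_service.audit(sender, receiver, payload)
    return deliver(payload)


auditor = MessageAuditService()
result = send_with_audit(
    auditor, lambda p: "accepted", "orders", "billing", {"order_id": 17}
)
print(result, len(auditor.records))
```

Because the audit logic lives in one shared service, every message path that needs to satisfy the new policy can call it instead of reimplementing it.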

4. Human-centric SOA governance in the broad

This final stage is the most thought-provoking of all, because it represents the highest maturity level. How can SOA help with the human activities that form the larger picture of governance in the organization? Clearly, XML representations of technical policies aren't the answer here. Rather, it's how implementing SOA helps expand the governance role architecture plays in the organization. It's a core best practice that architecture should drive IT governance. When the organization has adopted SOA, then SOA helps to inform best practices for IT governance overall.

The impact of SOA on enterprise architecture (EA) is also quite significant. Now that EAs increasingly realize that SOA is a style of EA, EA governance is becoming increasingly service-oriented in form as well. It is at this stage that part of the SOA governance value-proposition benefits the business directly, by formalizing how the enterprise represents capabilities consistent with the priorities of the organization.

The ZapThink take

The big win in moving to the fourth stage is how leveraging SOA approaches to formalize EA governance impacts the organization's business agility requirement. In some ways business agility is like any other business requirement, in that proper business analysis can delineate the requirement to the point that the technology team can deliver it, the quality team can test for it, and the infrastructure can enforce it. But as we've written before, as an emergent property of the implementation, business agility is a different sort of requirement from more traditional business requirements in a fundamental way.

A critical part of achieving this business agility over time is to break down the business agility requirement into a set of policies, and then establish, communicate, and enforce those policies -- in other words, provide business agility governance. Only now, we're not talking about technology at all. We're talking about transforming how the organization leverages resources in a more agile manner by formalizing its approach to governance by following SOA best practices at the EA level. Organizations must understand the role SOA governance plays in achieving this long-term strategic vision for the enterprise.

SOA and EA Training, Certification, and Networking Events

In need of vendor-neutral, architect-level SOA and EA training? ZapThink's Licensed ZapThink Architect (LZA) SOA Boot Camps provide four days of intense, hands-on architect-level SOA training and certification.

Advanced SOA architects might want to enroll in ZapThink's SOA Governance and Security training and certification courses. Or, are you just looking to network with your peers, interact with experts and pundits, and schmooze on SOA after hours? Join us at an upcoming ZapForum event. Find out more and register for these events at http://www.zapthink.com/eventreg.html.

Monday, November 9, 2009

Part 3 of 4: Web data services--Here's why text-based content access and management plays crucial role in real-time BI

Listen to the podcast. Find it on iTunes/iPod and Podcast.com. View a full transcript or download a copy. Learn more. Sponsor: Kapow Technologies.

Text-based content and information from across the Web are growing in importance to businesses. The need to analyze web-based text in real-time is rising to where structured data was in importance just several years ago.

Indeed, for businesses looking to do even more commerce and community building across the Web, text access and analytics form a new mother lode of valuable insights to mine.

As the recession forces the need to identify and evaluate new revenue sources, businesses need to capture such web data services for their business intelligence (BI) to work better, deeper, and faster.

In this podcast discussion, Part 3 of a series on web data services for BI, we discuss how an ecology of providers and a variety of content and data types come together in several use-case scenarios.

In Part 1 of our series we discussed how external data has grown in both volume and importance across the Internet, social networks, portals, and applications. In Part 2, we dug even deeper into how to make the most of web data services for BI, along with the need to share those web data services inferences quickly and easily.

Our panel now looks specifically at how near real-time text analytics fills out a framework of web data services that can form a whole greater than the sum of the parts, and this brings about a whole new generation of BI benefits and payoffs.

To help explain the benefits of text analytics and their context in web data services, we're joined by Seth Grimes, principal consultant at Alta Plana Corp., and Stefan Andreasen, co-founder and chief technology officer at Kapow Technologies. The discussion is moderated by me, Dana Gardner, principal analyst at Interarbor Solutions.

Here are some excerpts:

Grimes: "Noise free" is an interesting and difficult concept when you're dealing with text, because text is just a form of human communication. Whether it's written materials, or spoken materials that have been transcribed into text, human communications are incredibly chaotic ... and they are full of "noise." So really getting to something that's noise-free is very ambitious.

... It's become an imperative to try to deal with the great volume of text -- the fire hose, as you said -- of information that's coming out. And, it's coming out in many, many different languages, not just in English, but in other languages. It's coming out 24 hours a day, 7 days a week -- not only when your business analysts are working during your business day. People are posting stuff on the web at all hours. They are sending email at all hours.

... There are hundreds of millions of people worldwide who are on the Internet, using email, and so on. There are probably even more people who are using cell phones, text messaging, and other forms of communication.

If you want to keep up, if you want to do what business analysts have been referring to as a 360-degree analysis of information, you've got to have automated technologies to do it. You simply can't cope with the flood of information without them.

Fortunately, the software is now up to the job in the text analytics world. It's up to the job of making sense of the huge flood of information from all kinds of diverse sources, high volume, 24 hours a day. We're in a good place nowadays to try to make something of it with these technologies.

Andreasen: ... There is also a huge amount of what I call "deep web," very valuable information that you have to get to in some other way. That's where we come in and allow you to build robots that can go to the deep web and extract information.

... Eliminating noise is getting rid of all this stuff around the article that is really irrelevant, so you get better results.

The other thing around noise-free is the structure. ... The key here is to get noise-free data and to get full data. It's not only to go to the deep web, but also get access to the data in a noise-free way, and in at least a semi-structured way, so that you can do better text analysis, because text analysis is extremely dependent on the quality of data.

Grimes: ... [There are] many different use-cases for text analytics. This is not only on the Web, but within the enterprise as well, and crossing the boundary between the Web and the inside of the enterprise.

Those use-cases can be the early warning of a Swine flu epidemic or other medical issues. You can be sure that there is text analytics going on with Twitter and other instant messaging streams and forums to try to detect what's going on.

... You also have brand and reputation management. If someone has started posting something very negative about your company or your products, then you want to detect that really quickly. You want early warning, so that you can react to it really quickly. We have some great challenges out there, but ... we have great technologies to respond to those challenges.

We have a great use case in the intelligence world. That's one of the earliest adopters of text analytics technology. The idea is that if you are going to do something to prevent a terrorist attack, you need to detect and respond really quickly to the signals that are out there that something is pending, and you have to have a high degree of certainty that you're looking at the right thing and that you're going to react appropriately.

... Text analytics actually predates BI. The basic approaches to analyzing textual sources were defined in the late '50s. Actually, there is a paper from an IBM researcher from 1958 that defines BI as the analysis of textual sources.

... [Now] we want to take a subset of all of the information that's out there in the so-called digital universe and bring in only what's relevant to our business problems at hand. Having the infrastructure in place to do that is a very important aspect here.

Once we have that information in hand, we want to analyze it. We want to do what's called information extraction, entity extraction. We want to identify the names of people, geographical location, companies, products, and so on. We want to look for pattern-based entities like dates, telephone numbers, addresses. And, we want to be able to extract that information from the textual sources.
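As a small illustration of the pattern-based side of entity extraction described above, here is a minimal Python sketch using regular expressions. The patterns are deliberately simplified assumptions (one date format, one phone format) and the sample sentence is made up; identifying names of people, companies, and products generally needs more than patterns like these.

```python
import re

# Simplified, illustrative patterns; real-world formats vary far more widely.
PATTERNS = {
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "phone": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def extract_entities(text):
    """Return every match for each pattern, keyed by entity label."""
    return {label: pattern.findall(text) for label, pattern in PATTERNS.items()}


# Made-up sample text for the sketch.
sample = "Call 203-555-0142 before 2009-11-10 to confirm the order."
print(extract_entities(sample))
```

The output is a dictionary of entity labels to the strings found, which is the kind of semi-structured result that can then be loaded into a BI tool.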

Suitable technologies

All of this sounds very scientific and perhaps abstruse -- and it is. But, the good message here is one that I have said already. There are now very good technologies that are suitable for use by business analysts, by people who aren't wearing those white lab coats and all of that kind of stuff. The technologies that are available now focus on usability by people who have business problems to solve and who are not going to spend the time learning the complexities of the algorithms that underlie them.

Andreasen: ... Any BI or any text analysis is no better than the data source behind it. There are four extremely important parameters for the data sources. One is that you have the right data sources.

There are so many examples of people making these kinds of BI applications, text analytics applications, while settling for second-tier data sources, because they are the only ones they have. This is one area where Kapow Technologies comes in. We help you get exactly the right data sources you want.

The other thing that's very important is that you have a full picture of the data. So, if you have data sources that are relevant from all kinds of verticals, all kinds of media, and so on, you really have to be sure you have full coverage of data sources. Getting full coverage of data sources is another thing that we help with.

Noise-free data

We already talked about the importance of noise-free data to ensure that when you extract data from your data source, you get rid of the advertisements and you try to get the major information in there, because it's very valuable in your text analysis.
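To show the general idea of stripping page furniture before text analysis, here is a minimal Python sketch using only the standard library. It is not how any particular product works; the list of tags treated as noise and the sample page are assumptions for illustration, and real pages usually need site-specific rules.

```python
from html.parser import HTMLParser

# Tags whose contents are treated as noise in this sketch (an assumption).
SKIP_TAGS = {"script", "style", "nav", "aside", "footer"}


class ArticleTextExtractor(HTMLParser):
    """Collect text that is not nested inside any of the SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0  # how many noise elements we are currently inside
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.chunks.append(text)


def extract_article_text(html):
    parser = ArticleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Made-up page: navigation, an advertisement, the article, and a footer.
page = """
<html><body>
<nav>Home | Products | Contact</nav>
<aside>Buy one, get one free!</aside>
<article><h1>Quarterly results</h1><p>Revenue rose in the third quarter.</p></article>
<footer>Copyright notice</footer>
</body></html>
"""
print(extract_article_text(page))
```

Only the article heading and body survive, so whatever analysis runs next sees the content rather than the advertisements around it.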

Of course, the last thing is the timeliness of the data. We all know that people who do stock research get real-time quotes. They get it for a reason, because the newer the quotes are, the surer they can look into the crystal ball and make predictions about the future in a few seconds.

The world is really changing around us. Companies need to look into the crystal ball in the nearer and nearer future. If you are predicting what happens in two years, that doesn't really matter. You need to know what's happening tomorrow.

Thursday, November 5, 2009

Role of governance plumbed in Nov. 10 webinar on managing hybrid and cloud computing types

I'll be joining John Favazza, vice president of research and development at WebLayers, on Nov. 10 for a webinar on the critical role of governance in managing hybrid cloud computing environments.

The free, live webinar begins at 2 p.m. ET. Register at https://www2.gotomeeting.com/register/695643130. [Disclosure: WebLayers is a sponsor of BriefingsDirect podcasts.]

Titled "How Governance Gets You More Mileage from Your Hybrid Computing Environment," the webinar targets enterprise IT managers, architects and developers interested in governance for infrastructures that include hybrids of cloud computing, software as a service (SaaS) and service-oriented architectures (SOA). There will be plenty of opportunity to ask questions and join the discussion.

Organizations are looking for more consistency across IT-enabled enterprise activities, and are finding competitive differentiation in being able to manage their processes more effectively. That benefit, however, requires the ability to govern across different types of systems and infrastructure and applications delivery models. Enforcing policies and implementing comprehensive governance act to enhance business modeling, additional services orientation, process refinement, and general business innovation.

Increasingly, governance of hybrid computing environments establishes the ground rules under which business activities and processes -- supported by multiple and increasingly diverse infrastructure models -- operate.

Developing and maintaining governance also fosters collaboration between architects, those building processes and solutions for companies, and those operating the infrastructure -- be it supported within the enterprise or outside. It also sets up multi-party business processes, across company boundaries, with coordinated partners.

Cambridge, Mass.-based WebLayers provides a design-time governance platform that helps centralize policy management across multiple IT domains -- from SOA through mainframe and cloud implementations. Such governance clearly works to reduce the costs of managing and scaling such environments, individually and in combination.

In the webinar we'll look at how structured policies, including extensions across industry standards, speed governance implementations and enforcement -- from design-time through ongoing deployment and growth.

So join Favazza and me at 2 p.m. ET on Nov. 10 by registering at https://www2.gotomeeting.com/register/695643130.

Wednesday, November 4, 2009

HP takes converged infrastructure a notch higher with new data warehouse appliance

Hewlett-Packard (HP) on Wednesday announced new products, solutions and services that leave the technology packaging to HP, so users don't have to handle it themselves.

HP Neoview Advantage, HP Converged Infrastructure Architecture, and HP Converged Infrastructure Consulting Services are designed to help organizations drive business and technology innovations at lower total cost via lower total hassle. [Disclosure: HP is a sponsor of BriefingsDirect podcasts.]

HP’s measured focus

HP isn’t just betting on a market whim. Recent market research it supported reveals that more than 90 percent of senior business decision makers believe business cycles will continue to be unpredictable for the next few years -- and 80 percent recognize they need to be far more flexible in how they leverage technology for business.

The same old IT song and dance doesn't seem to be what these businesses are seeking. Nearly 85 percent of those surveyed cited innovation as critical to success, and 71 percent said they would sanction more technology investments -- if they could see how those investments met their organization’s time-to-market and business opportunity needs.

Cost nowadays is about a lot more than the rack and license. The fuller picture of labor, customization, integration, shared services support, data-use tweaking and inevitable unforeseen gotchas needs to be better managed in unison -- if that desired agility can also be afforded (and sanctioned by the bean-counters).

HP said its new offerings deliver three key advantages:

- Improved competitiveness and risk mitigation through business data management, information governance, and business analytics

- Faster time to revenue for new goods and services

- The ability to return to peak form, after being compressed or stretched.

First up, HP Neoview Advantage, the new release of the HP Neoview enterprise data warehouse platform, which aims to help organizations respond to business events more quickly by supporting real-time insight and decision-making.

HP calls the performance, capacity, footprint and manageability improvements dramatic and says the software also reduces the total cost of ownership (TCO) associated with industry-standard components and pre-built, pre-tested configurations optimized for warehousing.

HP Neoview Advantage and last year's Exadata product (produced in partnership with Oracle) seem to be aimed at different segments. Currently, HP Neoview Advantage is a "very high end database," whereas Exadata is designed for "medium to large enterprises," and does not scale to the Neoview level, said Deb Nelson, senior vice president, Marketing, HP Enterprise Business.

A converged infrastructure

Next up, HP Converged Infrastructure architecture. As HP describes it, the architecture adjusts to meet changing business needs, specifically what HP calls “IT sprawl,” which it points to as the key culprit in raising technology costs for maintenance that could otherwise be used for innovation.

HP touts key benefits of this new architecture. First, the ability to deploy application environments on the fly through shared service management, followed closely by lower network costs and less complexity. The new architecture is optimized through virtual resource pools and also improves energy integration and effectiveness across the data center by tapping into data center smart grid technology.

Finally, HP is offering Converged Infrastructure Consulting Services that aim to help customers transition from isolated product-centric technologies to a more flexible converged infrastructure. The new services leverage HP’s experience in shared services, cloud computing, and data center transformation projects to let customers design, test and implement scalable infrastructures.

Overall, typical savings of 30 percent in total costs can be achieved by implementing Data Center Smart Grid technologies and solutions, said HP.

With these moves to converged infrastructure, HP is filling out where others are newly treading. Cisco and EMC this week announced packaging partnerships that seek to deliver similar convergence benefits to the market.

"It's about experience, not an experiment," said Nelson.

BriefingsDirect contributor Jennifer LeClaire provided editorial assistance and research on this post.
